-

Olympic Games in northern Italy have German twist

Olympic Games in northern Italy have German twist

-

Bad Bunny: the Puerto Rican phenom on top of the music world

-

Snapchat blocks 415,000 underage accounts in Australia

Snapchat blocks 415,000 underage accounts in Australia

-

At Grammys, 'ICE out' message loud and clear

-

Dalai Lama's 'gratitude' at first Grammy win

Dalai Lama's 'gratitude' at first Grammy win

-

Bad Bunny makes Grammys history with Album of the Year win

-

Stocks, oil, precious metals plunge on volatile start to the week

Stocks, oil, precious metals plunge on volatile start to the week

-

Steven Spielberg earns coveted EGOT status with Grammy win

-

Knicks boost win streak to six by beating LeBron's Lakers

Knicks boost win streak to six by beating LeBron's Lakers

-

Kendrick Lamar, Bad Bunny, Lady Gaga triumph at Grammys

-

Japan says rare earth found in sediment retrieved on deep-sea mission

Japan says rare earth found in sediment retrieved on deep-sea mission

-

San Siro prepares for last dance with Winter Olympics' opening ceremony

-

France great Benazzi relishing 'genius' Dupont's Six Nations return

France great Benazzi relishing 'genius' Dupont's Six Nations return

-

Grammy red carpet: black and white, barely there and no ICE

-

Oil tumbles on Iran hopes, precious metals hit by stronger dollar

Oil tumbles on Iran hopes, precious metals hit by stronger dollar

-

South Korea football bosses in talks to avert Women's Asian Cup boycott

-

Level playing field? Tech at forefront of US immigration fight

Level playing field? Tech at forefront of US immigration fight

-

British singer Olivia Dean wins Best New Artist Grammy

-

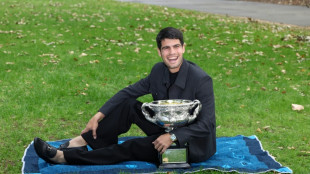

Hatred of losing drives relentless Alcaraz to tennis history

Hatred of losing drives relentless Alcaraz to tennis history

-

Kendrick Lamar, Bad Bunny, Lady Gaga win early at Grammys

-

Surging euro presents new headache for ECB

Surging euro presents new headache for ECB

-

Djokovic hints at retirement as time seeps away on history bid

-

US talking deal with 'highest people' in Cuba: Trump

US talking deal with 'highest people' in Cuba: Trump

-

UK ex-ambassador quits Labour over new reports of Epstein links

-

Trump says closing Kennedy Center arts complex for two years

Trump says closing Kennedy Center arts complex for two years

-

Reigning world champs Tinch, Hocker among Millrose winners

-

Venezuelan activist ends '1,675 days' of suffering in prison

Venezuelan activist ends '1,675 days' of suffering in prison

-

Real Madrid scrape win over Rayo, Athletic claim derby draw

-

PSG beat Strasbourg after Hakimi red to retake top spot in Ligue 1

PSG beat Strasbourg after Hakimi red to retake top spot in Ligue 1

-

NFL Cardinals hire Rams' assistant LaFleur as head coach

-

Arsenal scoop $2m prize for winning FIFA Women's Champions Cup

Arsenal scoop $2m prize for winning FIFA Women's Champions Cup

-

Atletico agree deal to sign Lookman from Atalanta

-

Real Madrid's Bellingham set for month out with hamstring injury

Real Madrid's Bellingham set for month out with hamstring injury

-

Man City won't surrender in title race: Guardiola

-

Korda captures weather-shortened LPGA season opener

Korda captures weather-shortened LPGA season opener

-

Czechs rally to back president locking horns with government

-

Prominent Venezuelan activist released after over four years in jail

Prominent Venezuelan activist released after over four years in jail

-

Emery riled by 'unfair' VAR call as Villa's title hopes fade

-

Guirassy double helps Dortmund move six points behind Bayern

Guirassy double helps Dortmund move six points behind Bayern

-

Nigeria's president pays tribute to Fela Kuti after Grammys Award

-

Inter eight clear after win at Cremonese marred by fans' flare flinging

Inter eight clear after win at Cremonese marred by fans' flare flinging

-

England underline World Cup

credentials with series win over Sri Lanka

-

Guirassy brace helps Dortmund move six behind Bayern

Guirassy brace helps Dortmund move six behind Bayern

-

Man City held by Solanke stunner, Sesko delivers 'best feeling' for Man Utd

-

'Send Help' debuts atop N.America box office

'Send Help' debuts atop N.America box office

-

Ukraine war talks delayed to Wednesday, says Zelensky

-

Iguanas fall from trees in Florida as icy weather bites southern US

Iguanas fall from trees in Florida as icy weather bites southern US

-

Carrick revels in 'best feeling' after Man Utd leave it late

-

Olympic chiefs admit 'still work to do' on main ice hockey venue

Olympic chiefs admit 'still work to do' on main ice hockey venue

-

Pope says Winter Olympics 'rekindle hope' for world peace

Is AI's meteoric rise beginning to slow?

A quietly growing belief in Silicon Valley could have immense implications: the breakthroughs from large AI models, the ones expected to bring human-level artificial intelligence in the near future, may be slowing down.

Since the frenzied launch of ChatGPT two years ago, AI believers have maintained that improvements in generative AI would accelerate exponentially as tech giants kept adding fuel to the fire in the form of data for training and computing muscle.

The reasoning was that delivering on the technology's promise was simply a matter of resources: pour in enough computing power and data, and artificial general intelligence (AGI) would emerge, capable of matching or exceeding human-level performance.

Progress was so rapid that leading industry figures, including Elon Musk, called for a moratorium on AI research.

Yet the major tech companies, including Musk's own, pressed forward, spending tens of billions of dollars to avoid falling behind.

OpenAI, ChatGPT's Microsoft-backed creator, recently raised $6.6 billion to fund further advances.

xAI, Musk's AI company, is in the process of raising $6 billion, according to CNBC, to buy 100,000 Nvidia chips, the cutting-edge electronic components that power the big models.

However, there appear to be problems on the road to AGI.

Industry insiders are beginning to acknowledge that large language models (LLMs) aren't scaling endlessly higher at breakneck speed when pumped with more power and data.

Despite the massive investments, performance improvements are showing signs of plateauing.

"Sky-high valuations of companies like OpenAI and Microsoft are largely based on the notion that LLMs will, with continued scaling, become artificial general intelligence," said AI expert and frequent critic Gary Marcus. "As I have always warned, that's just a fantasy."

- 'No wall' -

One fundamental challenge is the finite amount of language-based data available for AI training.

According to Scott Stevenson, CEO of Spellbook, an AI firm focused on legal tasks that works with OpenAI and other providers, relying on language data alone for scaling is destined to hit a wall.

"Some of the labs out there were way too focused on just feeding in more language, thinking it's just going to keep getting smarter," Stevenson explained.

Sasha Luccioni, researcher and AI lead at startup Hugging Face, argues a stall in progress was predictable given companies' focus on size rather than purpose in model development.

"The pursuit of AGI has always been unrealistic, and the 'bigger is better' approach to AI was bound to hit a limit eventually, and I think this is what we're seeing here," she told AFP.

The AI industry contests these interpretations, maintaining that progress toward human-level AI is unpredictable.

"There is no wall," OpenAI CEO Sam Altman posted Thursday on X, without elaboration.

Anthropic's CEO Dario Amodei, whose company develops the Claude chatbot in partnership with Amazon, remains bullish: "If you just eyeball the rate at which these capabilities are increasing, it does make you think that we'll get there by 2026 or 2027."

- Time to think -

Nevertheless, OpenAI has delayed the release of the long-awaited successor to GPT-4, the model that powers ChatGPT, because its gains in capability are below expectations, according to sources quoted by The Information.

Now, the company is focusing on using its existing capabilities more efficiently.

This shift in strategy is reflected in its recent o1 model, designed to provide more accurate answers through improved reasoning rather than increased training data.

Stevenson said an OpenAI shift to teaching its model to "spend more time thinking rather than responding" has led to "radical improvements".

He likened the advent of AI to the discovery of fire. Rather than tossing on more fuel in the form of data and computing power, he said, it is time to harness the breakthrough for specific tasks.

Stanford University professor Walter De Brouwer likens advanced LLMs to students transitioning from high school to university: "The AI baby was a chatbot which did a lot of improv" and was prone to mistakes, he noted.

"The homo sapiens approach of thinking before leaping is coming," he added.

S.Keller--BTB